AI, Grief, and the Future of Humanity: A Black Mirror Episode and the Rise of Liquid Neural Networks

I recently watched Black Mirror Season 2, Episode 1 (I know, I’m a little late to the game). Here’s a quick spoiler-free overview of the episode from IMDb:

After learning about a new service that lets people stay in touch with the deceased, a lonely, grieving Martha reconnects with her late lover.

This episode originally premiered on February 11, 2013, and it’s fascinating how much of the technology discussed, particularly involving AI, could be achieved today using large language models and cloud-based services.

🚨 SPOILER ALERT: The following contains spoilers for the episode! 🚨

In the episode, Martha (played by Hayley Atwell) loses her boyfriend, Ash (played by Domhnall Gleeson). Without her permission, a friend signs her up for a software service that allows her to communicate with her late boyfriend. Initially, it’s just a software-based interaction, but later in the episode, the technology is used to create a synthetic body resembling Ash.

While the physical replication feels like science fiction, the software part is remarkably plausible today thanks to advancements in large language models. These AI systems can analyze and replicate someone’s communication style based on data — like messages, social media posts, and emails.

However, Martha eventually notices significant differences. The AI version of Ash doesn’t argue or disagree with her, which underscores its limitations. It lacks the nuance of a real person, reducing Ash to a projection of his data rather than capturing the complexity of his personality.

11 Years Later: A Fresh Perspective on Black Mirror’s ‘Be Right Back’

Seeing the episode 11 years after its premiere highlights how much technology has evolved in a decade, showcasing both its potential and its unsettling implications. The ability to replicate human behavior through AI, powered by advanced language models, raises profound ethical and existential concerns. It challenges our understanding of life and death, concepts Steve Jobs famously summarized when he said, “Death is very likely the single best invention of life.” The idea of individuals “living on” through AI stirs moral dilemmas around consent: Would the deceased have agreed to this? Could such simulations occur without their permission? If so, isn’t that inherently wrong?

While AI can simulate human responses, it cannot yet replicate genuine emotions or consciousness. Despite remarkable progress, even today’s most sophisticated systems have clear limitations. This brings up a deeper concern — the role of technology in shaping human behavior. Social media, for example, offers a curated illusion of perfection, often exacerbating mental health struggles. The pressure to conform to perceived ideals discourages diverse opinions, creating echo chambers where dissent feels taboo.

The emotional power of AI cannot be ignored. For many, the prospect of speaking to a lost loved one again could outweigh moral concerns. A decade ago, the emergence of advanced systems like OpenAI’s ChatGPT seemed unimaginable. As AI continues to evolve, it might soon simulate human communication so convincingly that the line between reality and simulation becomes blurred. This could worsen unresolved ethical dilemmas and harm mental health.

Grieving the loss of a loved one is a deeply personal and necessary process. It takes time, but it’s how we eventually heal and move forward. If AI offers the illusion of bringing someone back, it could disrupt this natural process, much like how social media distorts reality. While the future of AI is both exciting and terrifying, we must confront these challenges carefully to ensure that progress doesn’t come at the expense of our humanity.

The Road to Artificial General Intelligence (AGI): How Far Are We?

The episode also sparks questions about the future of AI. While large language models have advanced dramatically, true Artificial General Intelligence (AGI) — a system with human-like reasoning, learning, and adaptability — remains elusive.

There’s no single definition of AGI, with tests like the Turing Test and the Coffee Test offering different benchmarks. Despite this ambiguity, several fields are likely to contribute to AGI’s development, one of them being neuroscience.

Future Architectures That Could Lead to AGI

Understanding the human brain’s architecture and cognitive processes is key. Insights from neuroscience could inspire more advanced AI models capable of mimicking human intelligence. Future innovations may include brain-inspired architectures, hybrid systems combining symbolic and connectionist AI, and novel approaches to learning and adaptability.

Liquid Neural Networks

Liquid Neural Networks (LNNs), introduced in a 2020 paper, represent a significant step forward in AI, drawing inspiration from the human brain. To understand LNNs, it’s essential to first grasp the architecture of the brain and its underlying principles.

The human brain consists of billions of neurons interconnected to form intricate networks. Each neuron processes information and communicates with others through synaptic connections. This interconnected structure of neurons inspired the development of artificial neural networks (ANNs) in the 1940s and 1950s.

A key property of the brain that LNNs emulate is neural plasticity — the ability of neurons to adapt and specialize in response to specific tasks and stimuli, such as walking, talking, or learning new skills. Similarly, in Liquid Neural Networks, individual neurons are modeled as dynamic systems capable of continuous adaptation to new inputs. This flexibility allows LNNs to excel in learning complex, time-dependent tasks, making them highly suitable for real-world applications like robotics and autonomous systems.

This adaptability fundamentally alters the architecture and mathematics of LNNs, differentiating them from traditional neural networks. While I have not yet delved into the research paper to explore the detailed mathematics, I will provide a link at the end of this blog for readers interested in understanding the mathematical foundations behind LNNs.
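To make the idea of "neurons as dynamic systems" a bit more concrete, here is a minimal Python sketch of a single neuron whose state follows the liquid time-constant update rule from the paper linked in the references: dx/dt = -(1/tau + f) * x + f * A. Everything else is my own simplifying assumption for illustration: the gate `f` is a plain sigmoid of the input only (in the paper it can also depend on the neuron's state), the integration uses a basic explicit Euler step rather than the paper's solver, and the names `ltc_step`, `effective_time_constant`, `w`, `b`, and all default values are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def ltc_step(x, u, dt, tau=1.0, A=1.0, w=1.0, b=0.0):
    """Advance one neuron's state x by one explicit Euler step.

    Assumed dynamics: dx/dt = -(1/tau + f) * x + f * A,
    where f is an input-dependent gate between 0 and 1.
    """
    f = sigmoid(w * u + b)  # assumption: gate depends on the input only
    dxdt = -(1.0 / tau + f) * x + f * A
    return x + dt * dxdt


def effective_time_constant(u, tau=1.0, w=1.0, b=0.0):
    """The neuron's response speed changes with the input: tau / (1 + tau * f)."""
    f = sigmoid(w * u + b)
    return tau / (1.0 + tau * f)


# Drive the neuron with a constant input and record how its state evolves.
x = 0.0
trajectory = []
for _ in range(1000):
    x = ltc_step(x, u=2.0, dt=0.01)
    trajectory.append(x)
```

The point of the sketch is the `effective_time_constant` function: a stronger input makes the neuron react faster, so the "time constant" is not fixed but shifts with what the neuron is seeing. That input-dependent behavior is where the "liquid" in the name comes from.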

Understanding Liquid Neural Networks Architecture

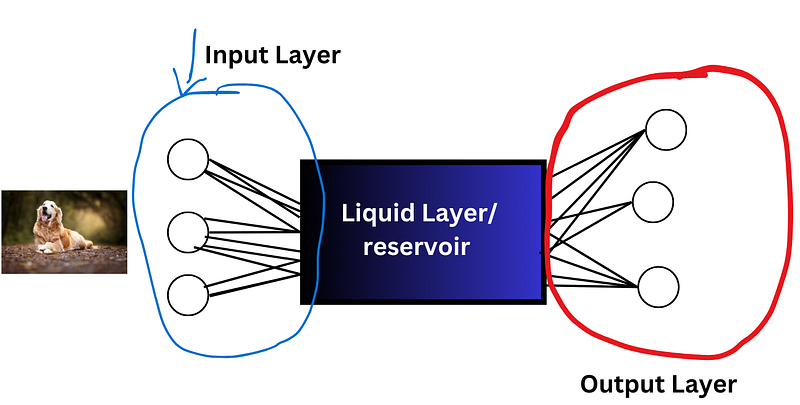

The above image shows the architecture of Liquid Neural Networks (LNNs). The main component in this architecture is the liquid layer or reservoir.

The liquid layer or reservoir is a large Recurrent Neural Network (RNN). Think of the reservoir as water — when given an input, it creates a ripple effect that generates the reservoir state. These reservoir states help identify complex patterns in the input.

Next comes the output state or readout layer, which maps the reservoir state through complex linear and non-linear transformations to produce the final output.
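The reservoir-plus-readout picture described above can be sketched in a few lines of Python. This is a rough illustration in the style of an echo state network (a classic form of reservoir computing), not the implementation from the paper or the video. The reservoir size, weight ranges, the 0.9 spectral-radius scaling, the sine-wave task, and names such as `run_reservoir` and `W_out` are all assumptions I picked for the example. The readout here is deliberately the simplest possible choice, a linear least-squares fit, rather than the more complex transformations a full model might use.

```python
import numpy as np

rng = np.random.default_rng(0)
n_inputs, n_reservoir = 1, 100

# Fixed random weights: in this sketch the reservoir itself is never trained.
W_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
W = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
# Rescale so the largest eigenvalue magnitude is 0.9, which keeps the
# "ripples" from growing without bound.
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))


def run_reservoir(inputs):
    """Feed a sequence through the reservoir and collect its states."""
    state = np.zeros(n_reservoir)
    states = []
    for u in inputs:
        # Each new input perturbs the current state, like a ripple in water.
        state = np.tanh(W_in @ np.atleast_1d(u) + W @ state)
        states.append(state)
    return np.array(states)


# Toy task (assumed for illustration): predict the next value of a sine wave.
t = np.linspace(0, 20 * np.pi, 2000)
signal = np.sin(t)
states = run_reservoir(signal[:-1])
targets = signal[1:]

# Discard the first steps so the initial all-zero state does not affect the fit.
washout = 100
X, y = states[washout:], targets[washout:]

# Readout layer: a linear map from reservoir states to outputs,
# fitted with ordinary least squares.
W_out, *_ = np.linalg.lstsq(X, y, rcond=None)

predictions = X @ W_out
mse = np.mean((predictions - y) ** 2)
```

Only `W_out` is learned here; the reservoir weights stay random. That is a large part of why this family of models can be cheap to train.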

Advantages of Liquid Neural Networks

Requires fewer parameters and less computational power

Uses less memory

Dynamic neural network architecture

Use Cases of Liquid Neural Networks

There are many use cases for Liquid Neural Networks (LNNs). One of the most promising is building more adaptive robots and self-driving cars. A key limitation of current self-driving systems and robots is their difficulty in adapting to new, unpredictable situations. Because LNNs are dynamic, they can adjust to changing inputs in real time, which addresses this concern and paves the way for more intelligent, responsive machines.

Limitations of Liquid Neural Networks

One of the major limitations of LNNs is that they are relatively new, and it will take time to determine whether they are truly worth investing in. Additionally, while LNNs excel with time-related data, traditional neural networks remain superior for non-time-series data. In summary, most limitations of LNNs boil down to one fundamental challenge: they are in their infancy and need time to mature and develop.

Conclusion

Season 2, Episode 1 of Black Mirror made me reflect deeply on the future of AI and AGI, a field I am fascinated by. However, for AGI to make meaningful progress, advancements in neuroscience, as I mentioned earlier, and, in my view, in quantum computing are essential. While it’s exciting to witness breakthroughs like LNNs, much of this remains theoretical. In practice, achieving these advancements could take another 50 to 60 years.

References:

YouTube Video: The Future of AI Looks Like THIS (& It Can Learn Infinitely) by AI Search. Available at: https://www.youtube.com/watch?v=biz-Bgsw6eE&t=1350s

Research Paper: Liquid Time-constant Networks by Ramin Hasani, Mathias Lechner, Alexander Amini, Daniela Rus, Radu Grosu. Available at: https://arxiv.org/abs/2006.04439

TV Show: Black Mirror, Season 2, Episode 1, “Be Right Back” (2013). Available on Netflix.